Ryan Nichols

Kristoffer Nielbo

Welcome to CERC’s clearinghouse site for resources in the field of quantitative textual analysis. This welcome page is intended to answer common questions of our visitors. See the right menu for topics.

What is quantitative textual analysis? Here the term ‘quantitative textual analysis’ refers to methods for the study of texts that extend beyond, though they sometimes incorporate, traditional humanistic ‘close readings’. For example, whereas a traditional Latinist might spend years pursuing answers to a set of research questions about Ovid’s corpus, the quantitative analyst might instead process that corpus and run a topic model on it in an effort to address the same or similar research questions.
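As a rough illustration of what it can mean to ‘process’ a corpus, the sketch below counts how often chosen words occur per 1,000 words in each text. This is a much simpler technique than a topic model, the two snippets are invented placeholders rather than real source texts, and the function names are our own. None of the tools featured on these pages require writing code like this.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens.

    Assumption: only unaccented a-z letters and apostrophes count as
    word characters, which is enough for this toy example.
    """
    return re.findall(r"[a-z']+", text.lower())


def relative_frequencies(text, terms):
    """Return each term's count per 1,000 tokens in the text."""
    tokens = tokenize(text)
    counts = Counter(tokens)
    total = len(tokens) or 1  # avoid dividing by zero on empty texts
    return {term: 1000 * counts[term] / total for term in terms}


# Hypothetical toy corpus: invented snippets, not real source texts.
corpus = {
    "doc_a": "Love changes all things, and love changes us.",
    "doc_b": "The gods are angry; the gods demand exile.",
}

for name, text in corpus.items():
    print(name, relative_frequencies(text, ["love", "gods"]))
```

Normalizing to a rate per 1,000 words, rather than reporting raw counts, makes texts of different lengths comparable.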

What’s the point of this site? The first purpose of this set of CERC pages is to offer humanists opportunities to reflect on the value of adding tools to their methodological toolkits that support more analytical, quantitative queries. Relatedly, the second purpose is to provide demonstrations (often hands-on, with supporting documentation or videos) of the techniques and software tools that allow humanists to ask better questions and test their answers to them.

What is the intended audience? The intended audience for CERC’s QTA pages is the traditional humanist who feels a nagging suspicion that traditional research produced by the method of ‘close reading’ on his or her research questions is missing something, is incomplete. As a result, we will be writing pages that contain only necessary technical details, and we will be featuring software tools that necessitate a minimum of programming. (For the most part, no programming, even in visual languages, will be needed to make use of the tools we plan to highlight.)

Why this now? The need for a unified set of pages on quantitative textual analysis arises due to shortcomings in the digital humanities literature. Digital humanists either do not use or underuse most of the tools we will discuss. We hope to use CERC’s interdisciplinarity to our advantage this year to demonstrate how humanists can greatly enhance their research by making use of software that only corpus linguists tend to use, for example.

How can I get involved? Submissions for guest posts are very much welcomed, as are requests for topical posts. If you would like to get involved, please email me at the address below with a description of your intended involvement. In addition, I welcome your correspondence and feedback.

Ryan Nichols

rnichols [at] exchange.fullerton.edu

Quantitative Textual Analysis vs. Business as Usual in the Humanities

by Dr. Ryan Nichols, Philosophy, Cal State Fullerton, Orange County CA

Humanists produce most research in the humanities with the same ‘close reading’ methods that have been in place for thousands of years. Close reading allows a deep understanding of difficult source texts. Only by doing a close reading does a researcher reveal subtle meanings or hidden truths about a text. Often a humanist’s close reading of a text takes advantage of her training in specialty languages, adding a layer of importance to her research activities.

Page from 12th c. edition of Tale of Genji. Source: Wikimedia Commons.

Despite these advantages, the close reading method has a number of limits that become increasingly apparent in an age of big data. Humanists using traditional methods may not see the forest for the trees as they focus on a single work or, indeed, a small set of passages in a single work. The method also appears undermotivated: the value of its products remains unclear when humanists so often arrive at incompatible interpretations of the same passage or text. Besides, close reading methods cannot be deployed on large databases of literature.

My knowledge of the limits of traditional methods in the study of the history of philosophy comes from personal experience. Without clear standards, scholars swap ever more strained interpretations of a work, a passage or, worse, a ‘doctrine’. I put years of research time into the study of Thomas Reid, an eighteenth-century Scottish thinker. I read extensively in this period, conducted archival research in Scottish libraries, dutifully wrote several articles, and published a book on the subject, Thomas Reid’s Theory of Perception, with OUP.

Meh.

Historians of philosophy continue to debate questions such as, “Does Thomas Reid endorse a direct theory of perception?” and “What is Reid’s response to the Molyneux Problem?” My name might appear in the debate, but so what? The pointlessness of these and related ‘debates’ is not lost on others outside the humanities. Little progress happens in philosophy, and even less in the history of philosophy. But there is a way forward, one that uses the skill set of the close-reading scholar.

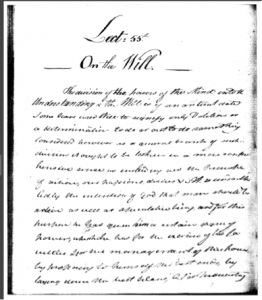

Lecture 55 of Thomas Reid’s Lectures on Natural Theology. Source: Author photo

Another reason for wanting to develop new text analysis tools is that humanities scholars now have access to an unprecedented resource: enormous corpora of digitized texts, such as the HathiTrust and the Internet Archive. For each big dataset, there are scores of smaller, boutique digital collections such as Digital Thoreau, Chronicling America (a digital newspaper corpus), and the Middle English Compendium. Many other databases of texts are available but sit behind paywalls; for example, some libraries have subscriptions to Eighteenth-Century Collections Online. (While many digital databases of texts are free, this does not imply that they are plug-and-play ready. Sometimes a great deal of work is needed to pre-process texts so that they work with a tool such as the Linguistic Inquiry and Word Count (LIWC); in other cases, say with handwritten manuscripts, using LIWC is impossible unless the documents are transcribed.) A great deal of time and money has gone into creating these databases. HathiTrust, for example, has had to fight off a large, lengthy lawsuit from the Authors Guild. Despite this investment, academics seem to use these sources as little more than glorified concordances, failing to see that having this much text digitized provides the opportunity for completely new sorts of automated, large-scale analyses.

The purpose of this series of articles is to provide humanities scholars with practical introductions to methods of textual analysis and to supporting software tools in an effort to enable those who want to move beyond traditional methods to do so. This clearly does not imply that close readings are behind us or that they are unnecessary; far from it. While we will attempt to focus on religious texts where possible, we envision these posts for scholars at large who want to move beyond or supplement traditional methods rather than for scholars of religion alone.

We have benefitted from a number of digital humanities resources in our own work, but the DH world is crowded with tools, many of uncertain or untested utility, buggy or poorly designed. Two CERC members took a course at DHSI in Victoria on SEASR, for example. We found the visual programming language clunky and its engine far too buggy to use.

With few exceptions, we will be looking at useful tools that a humanist with no programming background can use without paying high opportunity costs. This group will include freeware, shareware and some programs that require a purchase. Anticipated members of this set of tools include the Linguistic Inquiry and Word Count, RapidMiner, AntConc, Lexomics and more. Where possible we will link to appropriate, freely available online videos that demonstrate key features of the software tools we discuss. Where that is not possible, we will make our own, as in our article about the Linguistic Inquiry and Word Count.