Kristoffer Nielbo & Ryan Nichols

Techniques for classifying documents apply supervised learning algorithms to a research corpus. Their purpose is to build a classifier that can predict the class of new documents and test competing classification schemes (Baharudin, Lee & Khan 2010). While an unsupervised learning algorithm searches for document groups within the research corpus without the use of class values, a supervised learning algorithm learns to map a collection of documents, such as the New Testament corpus, onto pre-set categorical class values or labels, like ‘Historical’, ‘Pauline’, and ‘Non-Pauline’. When the classifier is trained—that is, when the algorithm has learned the mapping—it can be applied to other texts, like the Gospel of Thomas, to determine their class value. Alternatively, several classifiers can be trained on different classification schemes (three classes vs. four classes), and their performance can be compared to estimate the best fit.

For document classification, the research corpus is divided into a training set, which the model uses to learn the mapping, and a test set, which is used to validate the classifier. Both sets consist of input data and class values. The input data can be any numerical representation of a document collection, like a document-term matrix or distance matrix, and the class values are a set of categorical labels. During training, the classifier learns the correct document-class mapping through repeated cycles of input presentation, class output, and, finally, adjustment of the model according to correct class value. This is where the concept of supervised learning comes from. When the classifier has learned to map document-class mapping satisfactorily, we can give it a set of test documents to estimate how well it performs on unseen documents.

Instead of comparing the books of New Testament clusters to the traditional classes of Historical books, Pauline Epistles, and Non-Pauline Epistles, it is possible to train a classifier using these class values to inspect its performance. In this example, we used a gradient descent algorithm to train a multilayered feedforward neural network. Sounds impressive, but really it is just a type of classifier that is loosely inspired by what you and your brain do all the time (Gurney 1997; Hagan, Demuth & Beale 2002). To make the classification task a little more interesting, every book of the New Testament was segmented into slices of 100-words resulting in a total of 1821 slices. The slices were preprocessed and transformed into a document-term matrix, which was subjected to 95% sparsity reduction, so that only words that occurred in 5% or more of the slices remained in the matrix. This resulted in a document-term matrix with dimension 1821 rows × 221 columns. 60% of the slices belonged to the Historical class, 24% to the Pauline class, and 16% to the Non-Pauline class. The supervised task was to learn the correct mapping between every 100-word slice and the New Testament book class to which it belonged. The data was divided into random samples so that 85% of the slices were used as a training set, while the remaining 15% were set aside for testing.

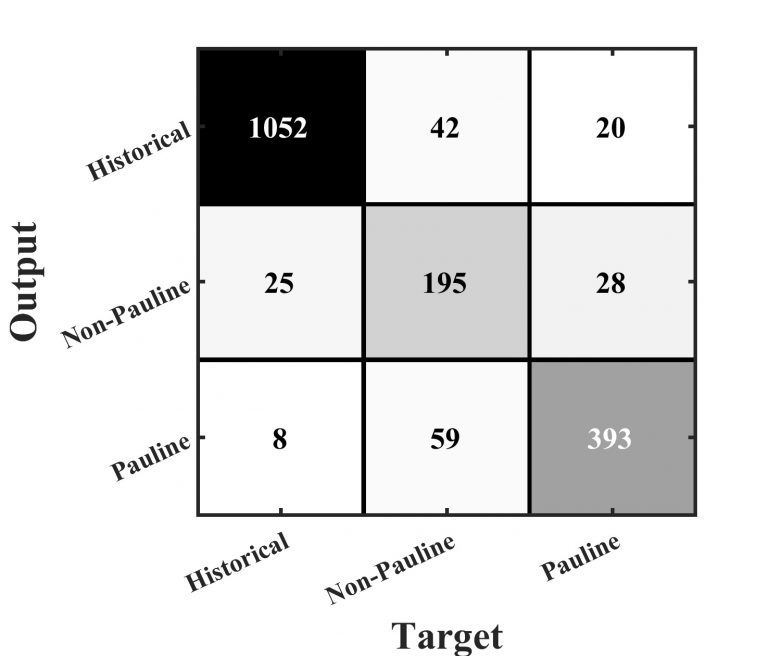

Figure 1: Confusion matrix. Estimation of the New Testament classifier’s performance. Target classes are those true classes of the ‘supervisor’, while output classes are the classifier’s predictions. Results are collapsed across training and testing to cover the entire set of slices.

Results of a classifier can be reported in several ways. Here we will use a confusion matrix that compares the classifier’s output class to the correct target class (figure 1). The main diagonal of the confusion matrix shows how many slices were correctly classified. Each entry outside the main diagonal shows how many slices from a target class (x-axis) were incorrectly assigned to another output class (y-axis). In general, the classifier performed quite well by correctly classifying 1640 slices out of 1821, which results in an overall predictive accuracy of 90%. This should be compared to a baseline accuracy of 60% that the classifier could obtain by predicting Historical for every slice. Interestingly, Non-Pauline is the most problematic class because the classifier only has 66% accuracy for the Non-Pauline slices (195 of 296 slices). Revelation is, in the traditional three classes, included in the Non-Pauline Epistles, while the clustering models consistently grouped Revelation with the Historical books. Similarly, a subset of the Non-Pauline Epistles was grouped ‘incorrectly’ with the Pauline Epistles. The classifier’s incorrect class assignments were caused by similarities it found in the word content. Finally, we divided the Gospel of Thomas into 100 word-slices (a total of 53 slices in the Lambdin translation) and applied the classifier to the slices. The classifier correctly identified the Gospel-like character of Thomas by assigning 94% of the slices to the Historical class.

What have we learned? What was the value of using document classification on the New Testament? Document classification text analytics provided confirmation of scholarly opinion about the common origins of many slices of the New Testament. Simultaneously, though, it also raised questions about the relationship between some slices and documents and others. When the text analyst passes the baton back to the traditional close reading scholar, the scholar would presumably want to zero-in on the subset of cases in which the classifier challenged traditional thinking about classification.